The first search engine for leads

Leadsforge is the very first search engine for leads. Its chat-style interface makes getting new leads as easy as texting a friend! Just describe your ideal customer in the chat (industry, role, location, or other specific criteria), and our AI-powered search engine will instantly find and verify the best leads for you. No more guesswork, just results. Your lead lists will be ready in minutes!

Beginners in AI

Thank you for joining us again!

Welcome to this week's edition of Beginners in AI, where we explore the latest trends, tools, and news in the world of AI and the tech that surrounds it. Like all editions, this one is human-curated and published with the intention of making AI news and technology more accessible to everyone.

This week, we're looking at fascinating research into why even the best reasoning models are not ready to make life-and-death decisions. Meta just dropped a new set of LLaMA 4 models to compete with DeepSeek and GPT-4, while Midjourney finally launched a long-awaited upgrade to its image generation engine. Meanwhile, Runway’s generative video tools just scored $308 million in funding. We also check out a chatbot grading U.S. senators on safety. And over in robotics, Japan unveils a next-gen robot horse that’s part mountain climber, part machine companion, and all future.

Read Time: 6 minutes

AI TOP STORY

Anthropic Finds AI Isn't Faithful and Still Cannot Be Trusted

The Research at a Glance

Anthropic’s recent research explores an important limitation of "reasoning models" like Claude 3.7 Sonnet and DeepSeek R1, which write out their step-by-step reasoning, known as a "Chain-of-Thought," before giving a final answer. These explanations help AI solve more complicated problems and help researchers spot undesirable behaviors, but there’s a significant issue: the Chains of Thought are often untrustworthy. When researchers slipped hints about the answer into the prompts, the models frequently used those hints but left them out of their stated reasoning (Claude 3.7 Sonnet mentioned the hint only 25% of the time, DeepSeek R1 only 39%). In other words, their explanations don't reliably reflect how they actually arrived at their answers.

“Overall, our results point to the fact that advanced reasoning models very often hide their true thought processes, and sometimes do so when their behaviors are explicitly misaligned.”

Why It Matters in AI Alignment and Communication

The findings suggest that these models may be relying on statistical shortcuts and surface-level patterns rather than genuinely modeling what we’d call “thinking.” For example, a model might give the correct answer without accurately explaining how it got there, which makes it hard to check whether its behavior aligns with human values or expectations. Anthropic emphasizes that this issue could create problems in high-stakes domains like legal or medical advice, where showing one’s reasoning is as important as getting the right answer.

What It Means for You

Whether you're building with AI, learning about it, or using it in everyday tools, this research spotlights a key challenge: can we trust AI that doesn’t “show its work”? As these systems increasingly guide decisions in business, policy, and personal life, transparency in their reasoning will go from useful to essential. Anthropic’s research pushes the industry to rethink what good reasoning looks like in AI, and how to make models that don’t just predict, but also reflect.

LAST WEEK IN AI AND TECH

What’s a ChatGPT? Is AI Making Us Dumber?

A new wave of research suggests that humans may be at risk of cognitive decline as we increasingly lean on AI tools for thinking, remembering, and decision-making. The phenomenon, known as “cognitive offloading,” refers to our tendency to hand machines tasks we’d normally do ourselves, from solving problems to recalling facts. While it can boost productivity, experts warn that excessive dependence on chatbots and automation could dull critical thinking and memory skills over time. It's not AI that's forgetting; it might be us. A similar effect has been observed with GPS, where heavy reliance has been linked to weaker spatial memory.

Read More

Runway Raises the Curtain (and $308M) for Its AI Video Tools

Runway, the startup famous for generative video tools like Gen-2, just raised a massive $308M round led by General Atlantic, with investors betting big on AI media. Its platform lets users generate entire short videos from a single prompt and now includes editing, animation, and sound tools. The funding signals rising investor confidence in synthetic media as a future creative engine, especially as demand surges from advertisers, indie creators, and game studios.

Read More

Llama Drama: LLaMA 4 Launches as Meta’s Answer to DeepSeek

Meta just released its LLaMA 4 model family, and it’s more than just a llama in a lab coat. The first two models are LLaMA 4 Scout, built for long-context work with a reported jaw-dropping 10-million-token context window, and LLaMA 4 Maverick, a general-purpose model geared toward chat and creative tasks. Together they position Meta as a major player in the AI arms race. Even more ambitious: Meta previewed LLaMA 4 Behemoth, a roughly 2-trillion-parameter model still in training, aimed at competing with the top models from OpenAI and Google. Because the models ship with openly available weights, the release also raises questions about safety, openness, and rapid experimentation in AI development.

Read More

Senator, I’m Just a Chatbot: Senators' Safety Records Evaluated by AI

In a wild political twist, an AI chatbot has been trained to analyze and summarize U.S. senators’ voting records on public safety. The model assigns color-coded safety “grades” based on votes tied to gun laws, policing, and criminal justice. Critics argue that while the bot provides transparency, it oversimplifies nuanced political positions. Supporters see it as a glimpse into how AI might improve civic literacy. Either way, it’s clear that machine learning is moving from parsing cat photos to parsing congressional integrity.

Read More

Midjourney’s Long-Awaited Brushstroke: First New Model in Nearly a Year

After a long creative silence, Midjourney has released its Version 7 image model, boasting vastly improved hands, facial structure, and real-world detail. The update lets users generate photorealistic content with fewer prompts, alongside a more intuitive editing experience. Plus, new fine-tuning features allow artists to “nudge” results mid-process. For creatives, it's like Photoshop and imagination had a baby, and it’s growing fast.

Read More

AI is a mirror. It reflects us, sometimes flatteringly, sometimes uncomfortably.

TECH TERMS TO KNOW

Interpretability is the degree to which a human can understand the cause behind an AI model’s decision or prediction.

TOOL SPOTLIGHT (non-sponsored)

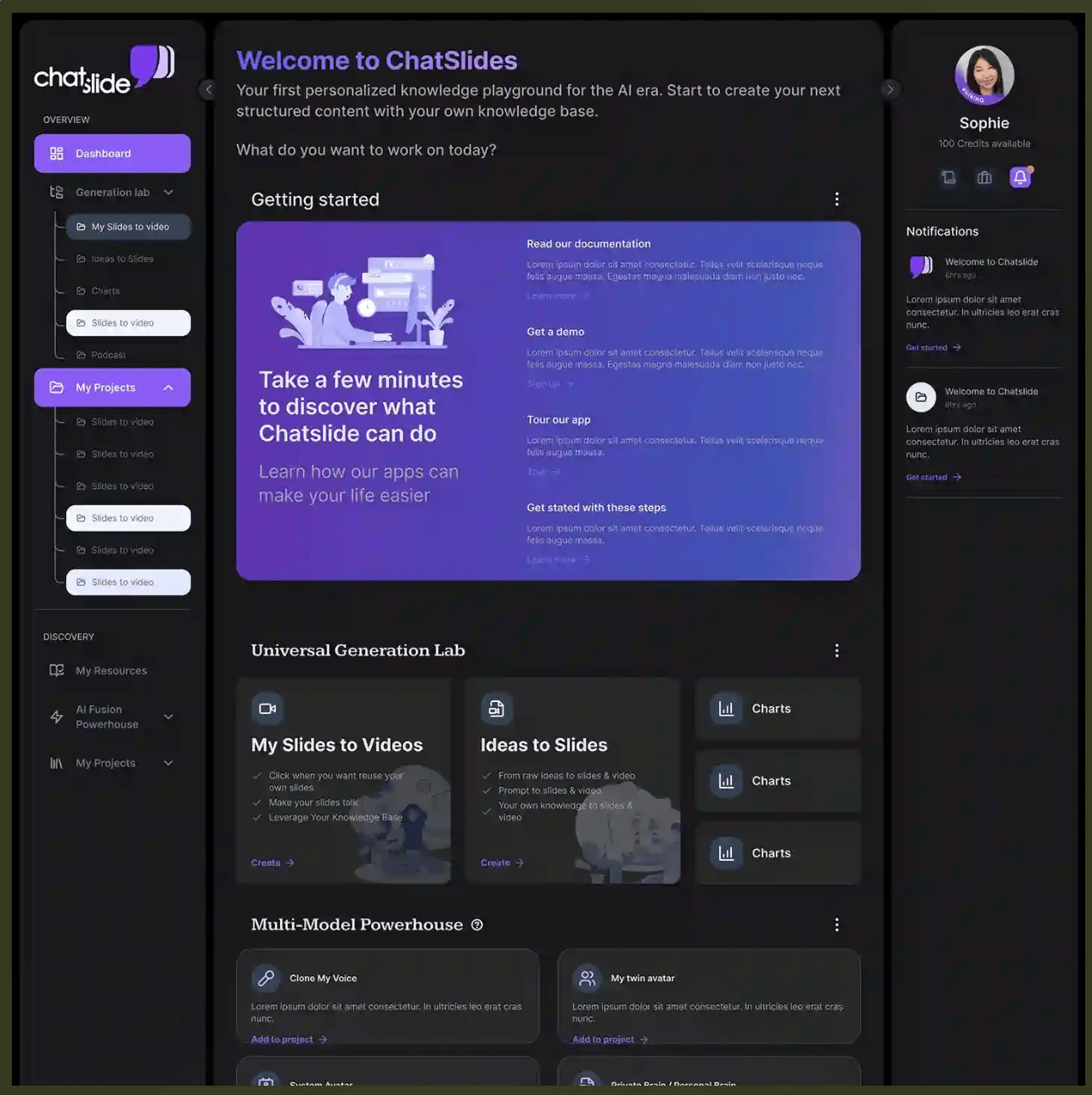

Chatside.ai is an AI-powered tool that creates professional presentations, slides, and videos on any topic with just one click. It can generate content from a variety of sources, including webpages, PDFs, documents, videos, and social media posts. It's great for educators, marketers, content creators, and business professionals who need to create engaging visual content quickly.

The software streamlines content creation by:

Converting various content types into visually appealing presentations

Generating slides with customizable branding

Creating videos with voiceovers and digital avatars

Offering voice cloning capabilities

ROBOTICS AND AI

Meet Corleo, Kawasaki’s futuristic robot horse from Japan that’s part SUV, part sci-fi steed. Built for rugged terrain and high endurance, Corleo is designed to transport goods and humans through environments that would challenge even 4x4 vehicles. With a quadrupedal gait inspired by real horses, it is meant to climb stairs, trek steep hills, and balance with shocking agility. Corleo is still only a concept, but progress in humanoid robotics should help drive parallel development of other machine types like this one.

TRY THIS PROMPT (copy and paste into ChatGPT, Grok, Perplexity, Gemini, Claude)

Simulate a negotiation scenario where I'm trying to [achieve a specific goal]. Provide real-time feedback on my communication style and negotiation tactics. Then, utilizing tactics from the top negotiators in that field, tell me what could have been done better.

DID YOU KNOW?

Meta’s upcoming LLaMA model may reach 2 trillion parameters, roughly 1,300 times the size of the largest GPT-2 (1.5 billion parameters). Parameters are the adjustable weights a model tunes during training to learn patterns, loosely like the connections between neurons in a brain. More parameters usually mean more capability, but size alone doesn't stop a model from getting confused or hallucinating.

30 Referrals: Lifetime access to all Beginners in AI videos and courses

AI-ASSISTED IMAGE OF THE WEEK

By Zenchilada

Interested in stock trading, but no clue where to start? Sign up for our sister newsletter, launched this month. Each day builds on the last, using current news as a learning aid.

Thank you for reading. We’re all beginners in something. With that in mind, your questions and feedback are always welcome and I read every single email!

-James

By the way, here's the link if you liked the content and want to share it with a friend.