Simplify Training with AI-Generated Video Guides

Are you tired of repeating the same instructions to your team? Guidde revolutionizes how you document and share processes with AI-powered how-to videos.

Here’s how:

1️⃣ Instant Creation: Turn complex tasks into stunning step-by-step video guides in seconds.

2️⃣ Fully Automated: Capture workflows with a browser extension that generates visuals, voiceovers, and calls to action.

3️⃣ Seamless Sharing: Share or embed guides anywhere effortlessly.

The best part? The browser extension is 100% free.

Beginners in AI

Good morning and thank you for joining us again!

Welcome to this daily edition of Beginners in AI, where we explore the latest trends, tools, and news in the world of AI and the tech that surrounds it. Like all editions, this one is human-curated and published with the intention of making AI news and technology more accessible to everyone.

THE FRONT PAGE

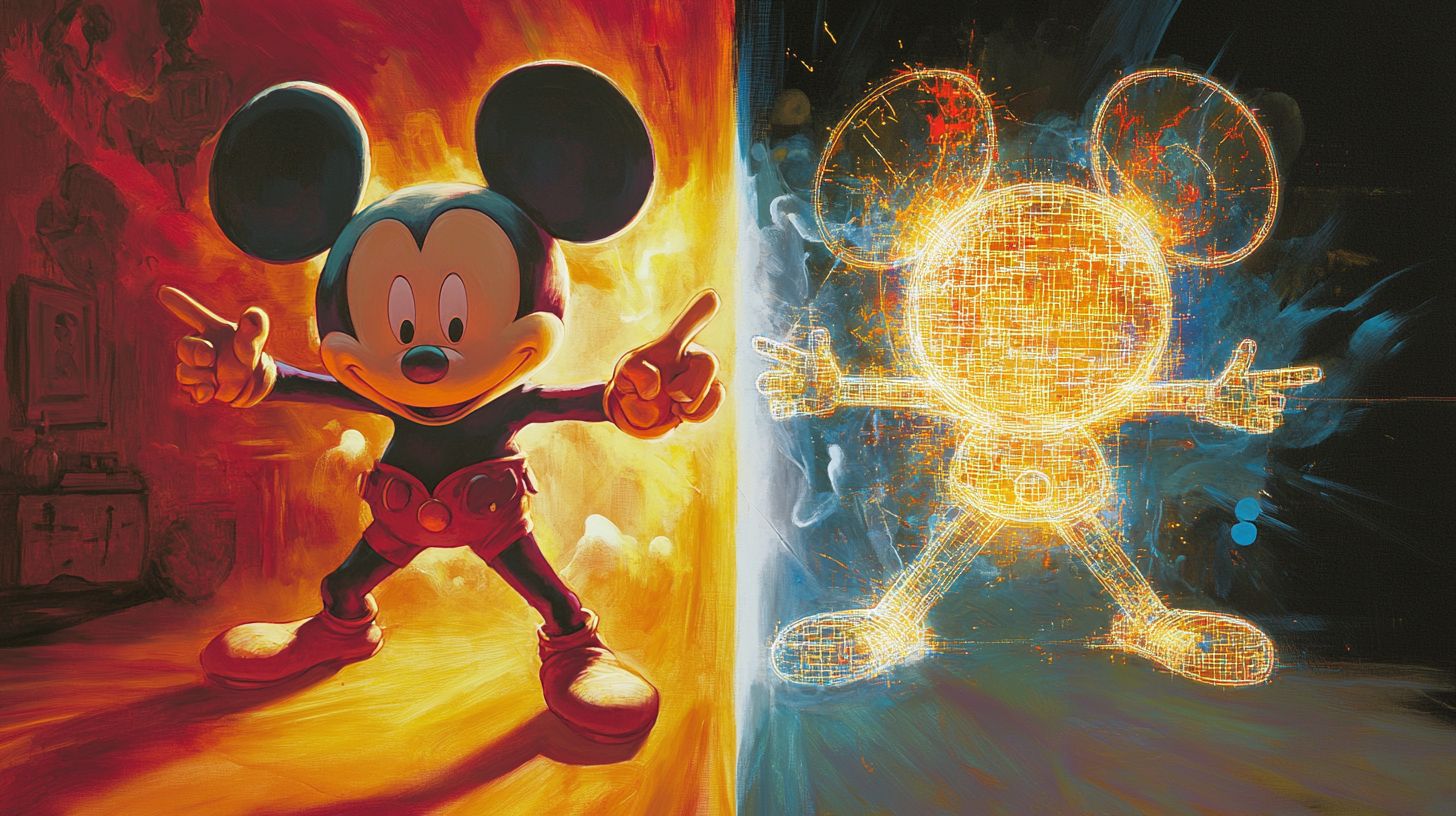

Mickey Mouse Is Coming to ChatGPT’s Sora — With Disney's Blessing

TLDR: Disney is investing $1 billion in OpenAI and licensing over 200 characters, from Darth Vader to Cinderella, for use in Sora's AI video generator, making it the first major Hollywood studio to embrace the technology it spent years fighting.

The Story:

Disney announced a three-year licensing agreement that will let Sora users generate short videos featuring characters from Disney, Marvel, Pixar, and Star Wars starting in early 2026. The deal covers animated and creature characters only, with no actor likenesses or voices included. You can make a video of Yoda, but not one that sounds like Frank Oz. Curated fan videos will stream directly on Disney+. Beyond the licensing deal, Disney becomes a major customer of OpenAI, using its APIs to build new tools and rolling out ChatGPT to employees. The investment includes warrants to buy additional equity. Notably, OpenAI won't be allowed to train its models on Disney's IP, a significant carveout given ongoing lawsuits over AI training data.

Its Significance:

This is a 180-degree turn. Just months ago, Disney sued Midjourney for what it called a "bottomless pit of plagiarism," and the Motion Picture Association demanded OpenAI take "immediate action" against copyright infringement on Sora after its October launch. Now Disney is essentially saying: if AI video generation is inevitable, we'd rather monetize it than fight it endlessly. The deal creates a template other studios will likely follow. Altman has hinted he's fielding more IP licensing requests. But it raises uncomfortable questions. Disney sent Google a cease-and-desist letter the day before this announcement, alleging "massive scale" copyright infringement. Same technology, different check-writers, wildly different treatment. For consumers, this opens a new kind of creative playground. You'll be able to make a short video of Stitch learning to skateboard or Darth Vader dancing, legally and with studio blessing. Whether that's a fun novelty or the start of user-generated content replacing professionally made entertainment depends on where this goes next.

QUICK TAKES

The story: AI video company Runway launched GWM-1, its first "world model"—an AI system that learns how the physical world works to simulate future events. The model comes in three versions: GWM-Worlds (creates explorable 3D environments), GWM-Robotics (trains robots in simulated scenarios), and GWM-Avatars (generates realistic human avatars). The simulations run at 24 fps and 720p, with an understanding of geometry, physics, and lighting. Runway also updated its Gen 4.5 video model with native audio and multi-shot generation up to one minute.

Your takeaway: Runway is moving beyond Hollywood video effects into robotics and simulation training. World models could let companies train robots and AI agents in safe, cheap virtual environments instead of the real world.

The story: OpenAI released GPT-5.2 on December 12, just weeks after GPT-5.1. The update comes in three versions: Instant (quick tasks), Thinking (complex multi-step work), and Pro (intensive research). OpenAI introduced a new benchmark called GDPval that simulates tasks across 44 jobs, claiming GPT-5.2 matched or beat human performance in about 71% of comparisons. API pricing sits at $1.75 per million input tokens and $14 per million output tokens.

Your takeaway: OpenAI is racing to keep up with Google's Gemini 3 after an internal "code red" pushed development forward. The focus on professional tasks like spreadsheets and presentations shows AI tools are moving beyond chatbots into everyday work software.
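
For readers curious what that pricing means in practice, here is a rough sketch of the arithmetic. It uses only the two per-million-token prices quoted in the story; the function name and the example token counts are made up for illustration, and a real bill may include factors not covered here.

```python
# Prices quoted in the story above (USD per one million tokens).
INPUT_PRICE_PER_MILLION = 1.75
OUTPUT_PRICE_PER_MILLION = 14.00


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Return a rough USD cost estimate for a single request."""
    input_cost = input_tokens / 1_000_000 * INPUT_PRICE_PER_MILLION
    output_cost = output_tokens / 1_000_000 * OUTPUT_PRICE_PER_MILLION
    return input_cost + output_cost


# Hypothetical request: 2,000 tokens in, 500 tokens out.
cost = estimate_cost(2_000, 500)
print(f"Estimated cost: ${cost:.4f}")
```

With these example numbers, the request comes to about one cent, and most of that is the output side, since output tokens cost eight times as much as input tokens.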

The story: Google released a "reimagined" version of its Gemini Deep Research agent built on Gemini 3 Pro. The new tool lets developers embed Google's research capabilities into their own apps through a new Interactions API. Google also open-sourced a new benchmark called DeepSearchQA to test how well AI handles complex, multi-step research. The agent will soon integrate into Google Search, Google Finance, the Gemini App, and NotebookLM.

Your takeaway: Google and OpenAI dropped major updates on the same day in what's becoming a weekly AI release battle. For users, this means more powerful research tools that can handle hours of work in minutes.

The story: Google Labs announced Disco, an experimental browser featuring "GenTabs" powered by Gemini 3. GenTabs creates interactive web applications based on your open tabs and chat history—no coding required. Examples include trip planners with maps and calendars, meal planners, and 3D educational tools. A waitlist is now open for macOS users.

Your takeaway: Google is testing what the browser of the future might look like. Instead of juggling dozens of tabs, AI could build custom mini-apps on the fly to help you research, plan, and organize.

The story: Kilo Code, an open-source AI coding agent, raised $8 million in seed funding led by Cota Capital. The company was co-founded by Scott Breitenother (founder of Brooklyn Data) and Sid Sijbrandij (GitLab's co-founder and executive chair). Since launching in early 2025, Kilo has crossed 750,000 downloads, hit #1 on OpenRouter, and now processes over 6 trillion tokens monthly. GitLab has a right of first refusal to acquire Kilo until August 2026 for just $1,000.

Your takeaway: With GitLab's co-founder backing an open-source competitor to Cursor and GitHub Copilot, the AI coding tool market is heating up. The unusual $1,000 buyout clause suggests GitLab is keeping its options open.

TOOLS ON OUR RADAR

⌨️ Text Blaze

Freemium: Create keyboard shortcuts that instantly expand into full emails, responses, and templates you use every day.

🎤 Fathom

Freemium: Record and transcribe your meetings automatically, then get AI summaries with action items in seconds.

🖼️ Stockimg AI

Freemium: Generate custom stock photos, logos, and graphics from simple text descriptions without any design skills.

⏱️ Clockify

Free: Track time across projects and clients for free with unlimited users and detailed reports.

TRENDING

Google Rolls Out Gemini for Chrome on iOS – iPhone users in the US can now use Gemini directly in Chrome. The AI assistant replaces the Google Lens icon and can summarize pages, answer questions about what you're viewing, and search your screen.

OpenAI Releases Open-Weight Safety Models – OpenAI released gpt-oss-safeguard, two open-weight models (120B and 20B parameters) that let developers set their own safety policies. Unlike fixed safety filters, these models reason about any policy you give them at runtime.

Amazon's AI-Generated Fallout Recap Gets Basic Facts Wrong – Amazon's new AI recap feature for Prime Video made several errors summarizing Fallout Season 1, including incorrectly stating flashbacks were set in the 1950s when they actually took place in 2077. The video was pulled after criticism.

Asian Bishops Draft AI Ethics Guidelines – Asian Catholic bishops met in Hong Kong to create the first regional Church guidelines on AI. Cardinal Stephen Chow called AI a "gift from God" while noting the need for ethical boundaries around the technology.

AI Leaders Eye Continual Learning as Next Breakthrough – AI labs are racing to build models that keep learning after training ends. Google introduced "Nested Learning" at NeurIPS 2025, treating models as layered systems that update at different speeds—similar to how the human brain works.

TRY THIS PROMPT (copy and paste into ChatGPT, Grok, Perplexity, Gemini)

Crisis Decision Protocol: Make fast, high-stakes decisions under pressure using military-grade decision frameworks

Build me an interactive Crisis Decision Protocol as a React artifact that guides rapid decision-making during high-pressure situations using proven emergency response frameworks.

The console should include these sections:

1. **Crisis Alert Setup** - Initial assessment:

• Crisis type selector (quick categorization):

- Business Crisis (PR disaster, security breach, competitor threat)

- Operational Crisis (system failure, supply chain break, key person loss)

- Financial Crisis (cash flow, investment loss, fraud)

- Personal Crisis (health emergency, relationship, legal)

- Team Crisis (conflict, resignation, performance)

• Severity level: 1-5 (Minor → Existential)

• Time pressure indicator: Hours, Days, Weeks available

• **SOP Library** section:

- "Upload SOP" button (accepts PDF, DOCX, MD, TXT)

- Shows list of uploaded procedures

- "Load My SOP" button to auto-populate protocol with your existing procedures

- Extract key actions, checklists, and stakeholder lists from SOP

- Override default framework with company-specific protocols

• Large red "INITIATE PROTOCOL" button

• Crisis timer starts counting up when initiated

2. **OODA Loop Dashboard** - Rapid assessment cycle:

• Four-quadrant layout for military OODA Loop:

**OBSERVE (Gather Facts)**

- What is happening right now? (text input)

- Who is affected? (stakeholder list)

- What are the immediate threats?

- Key data points/metrics showing the crisis

- "Search Crisis News" button for real-time info

**ORIENT (Analyze Context)**

- What patterns/precedents exist? (AI suggestions)

- What are our constraints? (time, money, resources)

- What biases might cloud judgment?

- Stakeholder impact matrix (visual grid)

**DECIDE (Choose Action)**

- Generate 3-5 response options (AI-assisted)

- Pros/cons for each option

- Risk assessment per option

- Speed of impact rating

- Recommended choice highlighted

**ACT (Execute & Monitor)**

- Action checklist with assignments

- Communication plan (who to tell, what to say)

- Success metrics to monitor

- Contingency triggers (if X happens, do Y)

3. **Decision Tree Navigator** - Branching logic:

• Start with primary decision question

• Yes/No or multiple choice branches

• Each branch leads to next question or action

• Visual tree showing all paths

• "Fast-forward" to likely scenarios

• Dead-end warnings (paths with no good outcome)

• Highlight recommended path in green

• "How did we get here?" backtrack view

4. **Countdown Timer & Checklist** - Execution tracker:

• Large countdown clock (time until decision must be made)

• Color changes as time runs low (green → yellow → red)

• Critical action checklist:

- Immediate containment actions

- Key people to notify

- Systems to lock down/activate

- External communications needed

- Legal/compliance requirements

• Checkbox completion with timestamps

• Satisfying "Mark as Done" animations

• Override button if situation changes

5. **Stakeholder Impact Matrix** - Who's affected:

• Grid showing stakeholder groups vs. impact levels

• Stakeholders: Customers, Team, Investors, Partners, Public, Regulators

• Impact: None → Minor → Major → Critical

• Click any cell to add communication plan

• Priority order for outreach

• Message templates by stakeholder type

• "Draft Crisis Comms" generator

6. **Rapid Assessment Tools** - Quick analysis:

• **Pre-Mortem Scanner**: "How could this get worse?"

• **Reversibility Test**: Can this decision be undone?

• **Regret Minimization**: Which choice will we least regret?

• **Resource Reality Check**: Do we have what's needed?

• **Second-Order Effects**: What happens next?

• **Bias Detector**: Confirmation, sunk cost, recency

• One-click checks with instant feedback

7. **Crisis Log & Timeline** - Documentation:

• Automatic timeline of all actions taken

• Who did what and when (audit trail)

• Decision rationale captured

• Real-time notes field

• Export crisis report for post-mortem

• Legal documentation support

• "Share with Leadership" button

8. **Post-Crisis Debrief** - Learn & improve:

• What worked? What didn't?

• Decision quality rating

• Time to resolution

• Cost of crisis (if measurable)

• Lessons learned capture

• Process improvements to prevent recurrence

• "Search Crisis Case Studies" for similar situations

Make it look like an emergency command center with:

• Dark theme (black/dark gray background) for focus

• Urgent red accents throughout

• Large, bold typography for critical info

• Alert-style design language

• Countdown timers prominently displayed

• Status indicators with warning colors

• High-contrast text for readability under stress

• Clear CTAs (call-to-action buttons)

• Military/tactical aesthetic

• Minimal decoration, maximum function

• Emergency services inspiration (911 dispatch, NASA control)

• Pulsing alerts for time-sensitive items

• Clear visual hierarchy for rapid scanning

When I click "Search Crisis News" or "Search Crisis Case Studies," use web search to find real-time information about the crisis type, relevant case studies, crisis management best practices, and similar situations with outcomes.

When I click "Upload SOP," allow me to upload existing Standard Operating Procedures (PDF, DOCX, MD, TXT formats). Extract the relevant protocols, checklists, and procedures from the document and integrate them into the crisis framework, replacing generic templates with my organization's specific response procedures.

What this does: Provides a structured framework for making fast, high-stakes decisions under pressure. It uses the military OODA loop and decision trees to cut through panic, and it lets you upload and integrate your own Standard Operating Procedures so the tool follows your organization's established crisis protocols.

WHERE WE STAND

✅ AI Can Now: Match or beat human workers on about 70% of common professional tasks like making spreadsheets, presentations, and documents, according to OpenAI's new benchmark tests.

❌ Still Can't: Keep learning after being deployed—current AI models are "frozen" once training ends and need expensive full retraining to learn new information.

✅ AI Can Now: Research complex topics across hundreds of sources and produce detailed reports in minutes, with tools like Google's Deep Research agent handling multi-step information gathering automatically.

❌ Still Can't: Reliably avoid making things up during long research tasks—the more decisions an AI makes in a chain, the higher the chance it "hallucinates" at least one wrong answer.

✅ AI Can Now: Simulate real-world physics, geometry, and lighting in real-time to create explorable 3D environments and train robots in virtual scenarios before deploying them in the real world.

❌ Still Can't: Get basic TV show facts right in automated video summaries—Amazon's AI recap confused 2077 for the 1950s and mischaracterized plot points in a show it was supposed to explain.
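
The point above about hallucinations piling up over long chains of decisions can be made concrete with a little arithmetic. This is a simplified sketch, not a measurement of any real model: it assumes every step has the same small chance of going wrong and that steps are independent of each other, and the 2% figure is a made-up example.

```python
def chance_of_any_error(per_step_error: float, steps: int) -> float:
    """Chance that at least one of `steps` independent steps goes wrong."""
    chance_all_correct = (1 - per_step_error) ** steps
    return 1 - chance_all_correct


# Hypothetical 2% error rate per step, over chains of growing length.
for steps in (1, 10, 50):
    risk = chance_of_any_error(0.02, steps)
    print(f"{steps} steps: {risk:.1%} chance of at least one error")
```

Under these assumptions, a 2% error rate per step grows to roughly an 18% chance of at least one mistake after 10 steps, which is why long research chains are harder to trust than single answers.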

RECOMMENDED LISTENING/READING/WATCHING

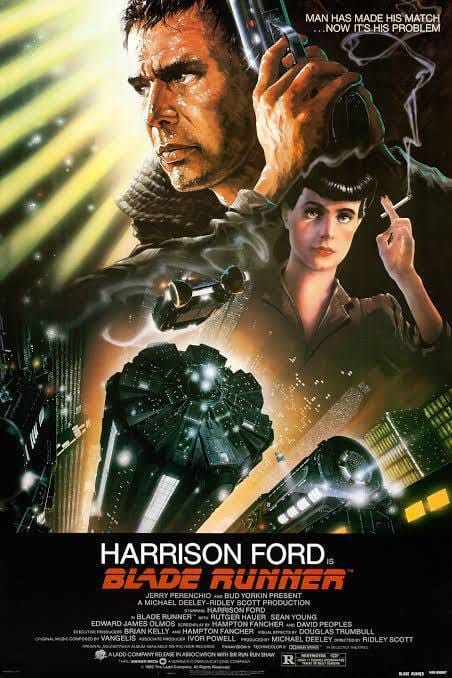

MOVIE: Blade Runner (1982)

Rick Deckard hunts replicants—bioengineered androids built for off-world labor who've come to Earth seeking more life. The replicants are illegal here, and Deckard's job is to retire them. But the longer he spends with them, the harder it gets to see them as just machines.

Ridley Scott's film is a visual masterpiece. The rain-soaked neon streets of future Los Angeles, Vangelis's synth score, the grainy film texture, and Rutger Hauer's "tears in rain" monologue have become iconic. The movie asks whether artificial beings that can feel fear and desire and hope deserve to live, and it doesn't give you an easy answer. Multiple versions exist—the theatrical cut, the director's cut, the final cut—and they each change the story's implications.

Thank you for reading. We’re all beginners in something. With that in mind, your questions and feedback are always welcome, and I read every single email!

-James